Getting started with Speech-to-text from Azure Speech Services

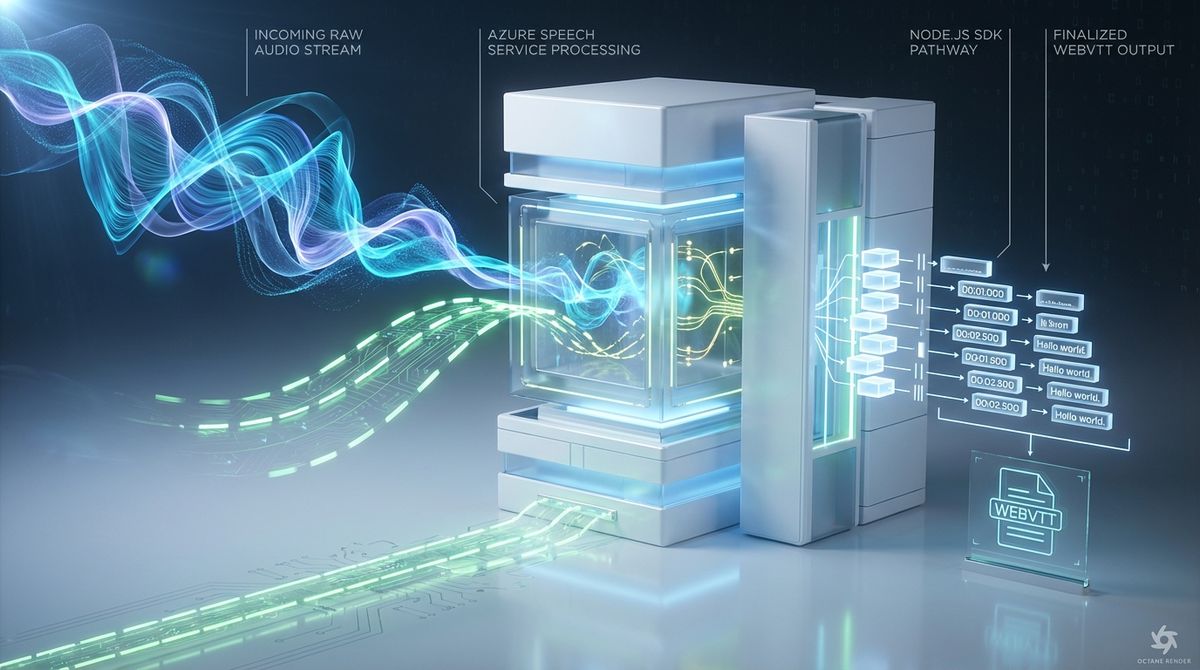

A few days ago I needed a tool to generate subtitles for a video, and I took the opportunity to play around with Azure Speech Services, especially the Speech-to-Text service. Now it's time to share a few things about this service while using it with Node.js.

Azure Speech Services

Nowadays Azure provides several interesting cognitive services to play with, and the Speech Services are only one part of them. As the name suggests, it groups all the services related to speech, such as converting audio to text and text to speech. Additionally, it provides real-time speech translation. These kinds of services are a key element when you are working with things like the Bot Framework.

In this post, I will focus on the speech-to-text service, which enables real-time transcription of audio streams into text. It supports a wide range of languages, even for more advanced features like customization.

So, let's start. First, create a Speech Service resource and get its subscription key. I will not cover that in this post, as you can follow the official documentation, which also offers some free options to test the service.

Using speech-to-text from Node.js

Let's start by creating the package.json file with npm init -y. Then, add the Speech SDK as a dependency with npm i --save microsoft-cognitiveservices-speech-sdk.

Once the dependencies are installed, create an index.js file to hold the code that calls the service. Let's start by adding the code required to initialize and configure the service, based on one of the SDK samples.

First, we will add the dependencies. We need the Speech SDK and fs to read the audio file.

```javascript
const sdk = require('microsoft-cognitiveservices-speech-sdk');
const fs = require('fs');
```

Now, let's add a few constants with the base configuration: the subscription key you created before, the service region where you created it, and the file name along with the language of that audio.

```javascript
const subscriptionKey = '{your-subscription-key-here}';
const serviceRegion = '{your-service-region-here}'; // e.g., "westus"
const filename = 'your-file.wav'; // 16000 Hz, Mono
const language = 'en-US';
```
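Hardcoding the key is fine for a quick test, but it is easy to commit it by accident. A minimal sketch that reads the key and region from environment variables instead; the variable names SPEECH_KEY and SPEECH_REGION and the helper loadSpeechSettings are my own hypothetical choices, not something the SDK looks for.

```javascript
// Hypothetical helper: read the Speech key and region from environment
// variables instead of hardcoding them. SPEECH_KEY and SPEECH_REGION are
// names chosen for this sketch; the SDK does not read them by itself.
function loadSpeechSettings(env = process.env) {
  const subscriptionKey = env.SPEECH_KEY;
  const serviceRegion = env.SPEECH_REGION;
  if (!subscriptionKey || !serviceRegion) {
    throw new Error('Set SPEECH_KEY and SPEECH_REGION before running the sample');
  }
  return { subscriptionKey, serviceRegion };
}
```

With this in place, the first two constants could become const { subscriptionKey, serviceRegion } = loadSpeechSettings();.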

We need to create the recognizer instance. To do that, we will call new sdk.SpeechRecognizer(speechConfig, audioConfig), but first we need to create the speech config and the audio config. For the audio configuration, let's add the following function, which creates a push stream, reads the audio file, and writes its contents to the push stream.

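```javascript
function createAudioConfig(filename) {
  // Push stream using the SDK's default input format (16 kHz, 16-bit, mono PCM)
  const pushStream = sdk.AudioInputStream.createPushStream();

  // Copy the file into the push stream chunk by chunk
  fs.createReadStream(filename)
    .on('data', arrayBuffer => {
      pushStream.write(arrayBuffer.slice());
    })
    .on('end', () => {
      // Signal that no more audio will be written
      pushStream.close();
    });

  return sdk.AudioConfig.fromStreamInput(pushStream);
}
```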
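A push stream created with createPushStream() and no format argument expects the SDK's default input format (16 kHz, 16-bit, mono PCM), which is why the file name comment above says 16000 Hz, Mono. If you are not sure about a file, a small sketch like the following can read its WAV header first. The helper names readWavFormat and matchesDefaultFormat are hypothetical, and the code assumes a standard RIFF/WAVE file with a "fmt " chunk.

```javascript
// Sketch: read the format fields from a WAV (RIFF) header by walking the
// RIFF chunks until the "fmt " chunk is found.
function readWavFormat(buffer) {
  if (buffer.toString('ascii', 0, 4) !== 'RIFF' || buffer.toString('ascii', 8, 12) !== 'WAVE') {
    throw new Error('Not a RIFF/WAVE file');
  }
  let offset = 12; // first chunk starts after "RIFF", the size field and "WAVE"
  while (offset + 8 <= buffer.length) {
    const id = buffer.toString('ascii', offset, offset + 4);
    const size = buffer.readUInt32LE(offset + 4);
    if (id === 'fmt ') {
      return {
        audioFormat: buffer.readUInt16LE(offset + 8), // 1 = PCM
        channels: buffer.readUInt16LE(offset + 10),
        sampleRate: buffer.readUInt32LE(offset + 12),
        bitsPerSample: buffer.readUInt16LE(offset + 22),
      };
    }
    offset += 8 + size + (size % 2); // chunks are padded to an even size
  }
  throw new Error('No fmt chunk found');
}

// True when the file matches the default push stream format.
function matchesDefaultFormat(fmt) {
  return fmt.audioFormat === 1 && fmt.channels === 1 &&
    fmt.sampleRate === 16000 && fmt.bitsPerSample === 16;
}
```

For example, matchesDefaultFormat(readWavFormat(fs.readFileSync(filename))) returns false for a 44.1 kHz stereo file. Note that this reads the whole file into memory; for large files, reading only the first few kilobytes would be enough.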

Now, let's create the recognizer instance: build the audio configuration, create the speech config from the subscription key and region, and set the recognition language.

```javascript
function createRecognizer(audiofilename, audioLanguage) {
  const audioConfig = createAudioConfig(audiofilename);
  const speechConfig = sdk.SpeechConfig.fromSubscription(
    subscriptionKey,
    serviceRegion
  );
  speechConfig.speechRecognitionLanguage = audioLanguage;

  return new sdk.SpeechRecognizer(speechConfig, audioConfig);
}

let recognizer = createRecognizer(filename, language);
```

To use the recognizer you have two options. The first one is the recognizeOnceAsync method. This is the easiest option, but it recognizes only a single utterance, so it's only suitable for short audio (up to about 15 seconds).

```javascript
recognizer.recognizeOnceAsync(
  result => {
    console.log(result);
    recognizer.close();
    recognizer = undefined;
  },
  err => {
    console.trace('err - ' + err);
    recognizer.close();
    recognizer = undefined;
  }
);
```
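If you prefer async/await over callbacks, the same call can be wrapped in a Promise. This is a minimal sketch; recognizeOnce is a hypothetical helper name, and it only relies on the recognizer exposing recognizeOnceAsync(callback, errorCallback) and close(), as used above.

```javascript
// Sketch: wrap the callback-based recognizeOnceAsync in a Promise so it can be
// awaited. The recognizer is closed whether recognition succeeds or fails.
function recognizeOnce(recognizer) {
  return new Promise((resolve, reject) => {
    recognizer.recognizeOnceAsync(
      result => {
        recognizer.close();
        resolve(result);
      },
      err => {
        recognizer.close();
        reject(new Error(err));
      }
    );
  });
}
```

Inside an async function, you could then write const result = await recognizeOnce(createRecognizer(filename, language)); and read result.text.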

The second option is the startContinuousRecognitionAsync method, which lets you work with longer streams by subscribing to events that are raised for each recognized phrase.

```javascript
recognizer.recognized = (s, e) => {
  if (e.result.reason === sdk.ResultReason.NoMatch) {
    const noMatchDetail = sdk.NoMatchDetails.fromResult(e.result);
    console.log(
      '(recognized) Reason: ' +
        sdk.ResultReason[e.result.reason] +
        ' | NoMatchReason: ' +
        sdk.NoMatchReason[noMatchDetail.reason]
    );
  } else {
    console.log(
      `(recognized) Reason: ${sdk.ResultReason[e.result.reason]} | Duration: ${
        e.result.duration
      } | Offset: ${e.result.offset}`
    );
    console.log(`Text: ${e.result.text}`);
  }
};

recognizer.canceled = (s, e) => {
  let str = '(cancel) Reason: ' + sdk.CancellationReason[e.reason];
  if (e.reason === sdk.CancellationReason.Error) {
    str += ': ' + e.errorDetails;
  }
  console.log(str);
};

recognizer.speechEndDetected = (s, e) => {
  console.log(`(speechEndDetected) SessionId: ${e.sessionId}`);
  recognizer.close();
  recognizer = undefined;
};

recognizer.startContinuousRecognitionAsync(
  () => {
    console.log('Recognition started');
  },
  err => {
    console.trace('err - ' + err);
    recognizer.close();
    recognizer = undefined;
  }
);
```

With this approach, we can process streams in real time without issues.
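Continuous recognition can also be wrapped in a Promise that resolves once the whole file has been processed. The sketch below stops on the sessionStopped event and then calls stopContinuousRecognitionAsync, which, as far as I know, is the pattern Microsoft's how-to samples use, instead of closing in speechEndDetected. The helper name recognizeAll is hypothetical, and it assumes NoMatch results carry empty text.

```javascript
// Sketch: run continuous recognition until the session stops and resolve with
// every recognized result. Only the recognizer's callback properties and
// *Async methods are used, so a stub object works for testing.
function recognizeAll(recognizer) {
  return new Promise((resolve, reject) => {
    const results = [];
    let settled = false;

    // Close the recognizer exactly once and settle the promise.
    const finish = err => {
      if (settled) return;
      settled = true;
      recognizer.close();
      if (err) reject(err);
      else resolve(results);
    };

    recognizer.recognized = (s, e) => {
      // NoMatch results normally carry empty text, so skip them.
      if (e.result.text) results.push(e.result);
    };

    recognizer.canceled = (s, e) => {
      // With file or stream input, reaching the end of the audio is also
      // reported as a cancellation; only errorDetails indicates a failure.
      if (e.errorDetails) finish(new Error(e.errorDetails));
    };

    recognizer.sessionStopped = () => {
      if (settled) return;
      recognizer.stopContinuousRecognitionAsync(
        () => finish(),
        err => finish(new Error(err))
      );
    };

    recognizer.startContinuousRecognitionAsync(
      () => {},
      err => finish(new Error(err))
    );
  });
}
```

Each resolved item is the SDK result object, so offset, duration, and text are still available for building subtitles.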

The Web Video Text Tracks Format (WebVTT)

Now, as I mentioned at the beginning of this post, I wanted to create subtitles for a video. For that, I needed to write the text to a file using a known format. In this case, I chose WebVTT because it's simple to write. The format basically looks like this:

```text
WEBVTT

00:01.000 --> 00:04.000
Never drink liquid nitrogen.

00:05.000 --> 00:09.000
- It will perforate your stomach.
- You could die.
```

As you saw before, each recognized result includes an offset and a duration, so we only need to format those numbers. Note that both values are expressed in ticks of 100 nanoseconds (10,000,000 ticks per second), so you can use something like the following to obtain the correct format.

```javascript
// Converts 100-nanosecond ticks (the unit of result.offset and result.duration)
// into a WebVTT timestamp (hh:mm:ss.ttt), zero-padding every field.
function parseTime(ticks) {
  const totalMs = Math.floor(ticks / 10000); // 10,000 ticks per millisecond
  const hour = Math.floor(totalMs / 3600000);
  const minute = Math.floor(totalMs / 60000) % 60;
  const second = Math.floor(totalMs / 1000) % 60;
  const mil = totalMs % 1000;

  const pad = (value, width) => value.toString().padStart(width, '0');
  return `${pad(hour, 2)}:${pad(minute, 2)}:${pad(second, 2)}.${pad(mil, 3)}`;
}
```
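WebVTT is strict about the timestamp shape: hours are optional but must have at least two digits when present, minutes and seconds are always two digits between 00 and 59, and the fraction is exactly three digits. A quick way to sanity-check the output of a formatting function is a regular expression; isWebVttTimestamp is a hypothetical helper written for this sketch.

```javascript
// Sketch: check a string against the WebVTT timestamp syntax:
// optional hours (two or more digits) followed by ":", then mm:ss.ttt,
// where minutes and seconds are two digits in the range 00-59.
const WEBVTT_TIMESTAMP = /^(?:\d{2,}:)?[0-5]\d:[0-5]\d\.\d{3}$/;

function isWebVttTimestamp(value) {
  return WEBVTT_TIMESTAMP.test(value);
}
```

A timestamp such as 0:1:5.12, with no zero-padding, fails this check, and a player may reject the cue.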

With this, you just need to open an output file and replace the console.log calls in the else branch of the recognized event handler with the following.

```javascript
outputStream.write(
  `${parseTime(e.result.offset)} --> ${parseTime(
    e.result.offset + e.result.duration
  )}\r\n`
);
outputStream.write(`${e.result.text}\r\n\r\n`);
```

Note that the file needs to start with WEBVTT, so the code would look something like the following.

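```javascript
const outputFile = 'your-file.vtt'; // placeholder output path
const outputStream = fs.createWriteStream(outputFile);

outputStream.once('open', () => {
  // Every WebVTT file must start with this header line
  outputStream.write(`WEBVTT\r\n\r\n`);

  let recognizer = createRecognizer(filename, language);

  // ... the rest of the code here
});
```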
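Instead of streaming each cue to the file as it arrives, you could also collect the recognized results (for example, by pushing e.result into an array in the recognized handler) and build the whole document at once. This is a minimal sketch under that assumption; ticksToTimestamp and buildWebVtt are hypothetical names, and each result is assumed to expose offset and duration in 100-nanosecond ticks plus text, like the SDK's result objects.

```javascript
// Converts 100-nanosecond ticks into a WebVTT timestamp, hh:mm:ss.ttt.
function ticksToTimestamp(ticks) {
  const totalMs = Math.floor(ticks / 10000);
  const pad = (n, width) => String(n).padStart(width, '0');
  const hours = Math.floor(totalMs / 3600000);
  const minutes = Math.floor(totalMs / 60000) % 60;
  const seconds = Math.floor(totalMs / 1000) % 60;
  return `${pad(hours, 2)}:${pad(minutes, 2)}:${pad(seconds, 2)}.${pad(totalMs % 1000, 3)}`;
}

// Builds a complete WebVTT document (header plus one cue per result).
function buildWebVtt(results) {
  const cues = results
    .filter(r => r.text) // skip results without text (e.g. NoMatch)
    .map(r =>
      `${ticksToTimestamp(r.offset)} --> ${ticksToTimestamp(r.offset + r.duration)}\r\n${r.text}`
    );
  return `WEBVTT\r\n\r\n${cues.join('\r\n\r\n')}\r\n`;
}
```

The result can then be written in one call, for example fs.writeFileSync('your-file.vtt', buildWebVtt(results)), which avoids having to coordinate the write stream with the recognizer events.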

But... I have a video, not an audio file

Everything is fantastic, but, as I mentioned, I had a video, not an audio file. In this kind of scenario, we need to process the file before sending it to the service. I chose ffmpeg and a simple wrapper named ffmpeg-extract-audio to extract the audio.

First, install the dependency with npm i --save ffmpeg-extract-audio. Then, you will need to download ffmpeg and make it available to run from a terminal. You can also add it to a new folder named bin inside your project folder.

Once you have set up ffmpeg, add the following code to extract the audio from the video file as a single channel with a sample rate of 16 kHz.

```javascript
const extractAudio = require('ffmpeg-extract-audio');

//...

extractAudio({
  input: 'input-video.mp4',
  output: 'your-file.wav',
  transform: cmd => {
    cmd.audioChannels(1).audioFrequency(16000);
  },
}).then(() => {
  console.log('Sound ready');
  // Here all the previous code
});
```
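If you would rather not add a wrapper dependency, Node's built-in child_process module can call ffmpeg directly. This is a sketch assuming ffmpeg is on your PATH; the helper names are hypothetical. The -vn, -ac, -ar, and -acodec options are standard ffmpeg flags, and pcm_s16le asks for 16-bit PCM so the WAV file matches the Speech SDK's default input format.

```javascript
const { spawn } = require('child_process');

// Builds ffmpeg arguments that drop the video stream (-vn) and write mono
// (-ac 1), 16 kHz (-ar 16000), 16-bit PCM (-acodec pcm_s16le) audio.
// -y overwrites the output file if it already exists.
function ffmpegExtractArgs(input, output) {
  return ['-y', '-i', input, '-vn', '-ac', '1', '-ar', '16000', '-acodec', 'pcm_s16le', output];
}

// Runs ffmpeg and resolves when it exits with code 0.
function extractAudioWithFfmpeg(input, output) {
  return new Promise((resolve, reject) => {
    const proc = spawn('ffmpeg', ffmpegExtractArgs(input, output), { stdio: 'inherit' });
    proc.on('error', reject); // e.g. ffmpeg is not installed
    proc.on('close', code => {
      if (code === 0) resolve();
      else reject(new Error(`ffmpeg exited with code ${code}`));
    });
  });
}
```

Usage mirrors the wrapper: extractAudioWithFfmpeg('input-video.mp4', 'your-file.wav').then(() => { /* previous code */ });.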

Summing up

I think this is a very simple yet powerful example of how to use the Speech-to-Text service, and I hope you find it the same way. These kinds of Cognitive Services help you level up your applications very easily, and they are a great tool to keep on your radar. If you want more information, go to the official documentation.

You can find the full sample in my speech-to-text-sample repository.

Note that this is just a sample of what you can do with the Speech Services; if you want better subtitles, you might want to look at other services such as Microsoft Video Indexer.