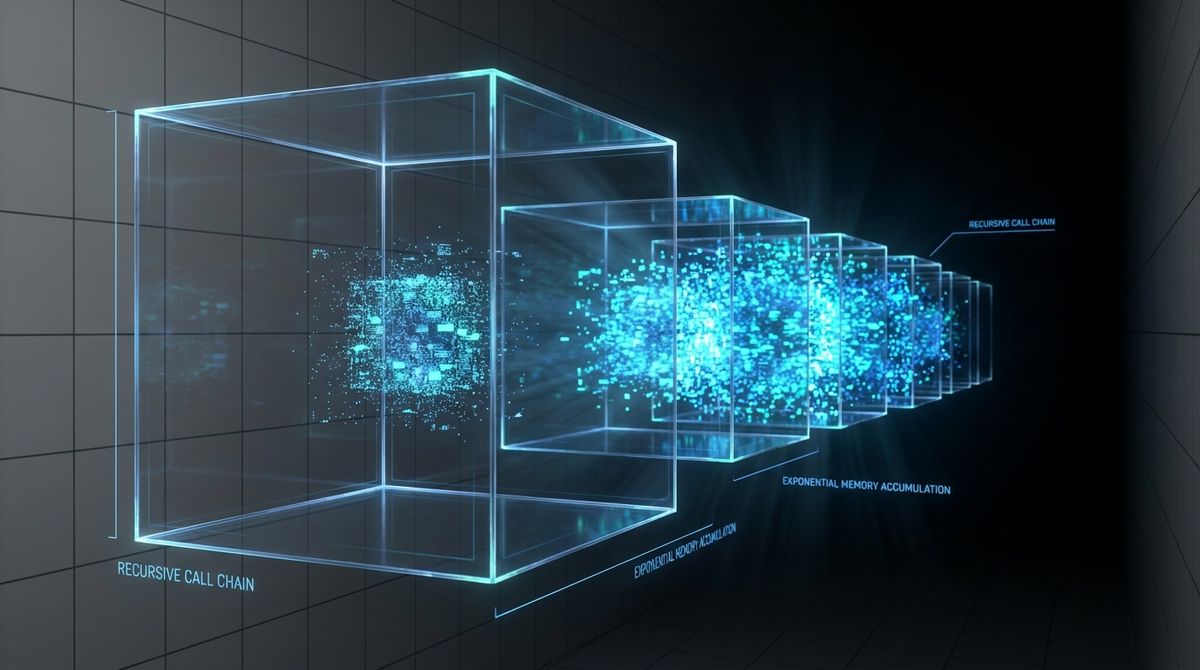

The recursion problem

I love recursion and functional programming, but you need to understand the consequences of doing some operations. A few days ago, we were seeing a node process crashing because of memory consumption. This was super strange as it was a simple process that just read some information from an endpoint and push it to another one (i.e., a simple copy operation). Just to make things a bit confusing, we were setting the memory of the container to 8gb using the --max-old-space-size=8192 parameter as part of the node execution, which implies that the simple copy process was consuming 8gb of memory.

At a first glance the code was OK. We tested small batch, and everything was working as expected and the memory consumption seems reasonable with the amount of data moving from one place to another, until we started playing with one specific part of it. To make things short, the problem was related to a listing of all the content that occurred before copying the content. Based on that finding, one might first thing that having the full list of items in memory could be the actual problem but even if we had millions of resources to be copied, as the information for each of them was around 200 bytes at the most, the result will be less than 1gb, which was far from the 8gb that the process was consuming.

The actual problem

As the title of this post might suggest, the actual problem was that the listing of those files was performed using a recursion function but that was only part of it. Let's say that we have the following code to mock a function that obtains 100 items per request.

const values = Array.from(Array(100).keys()).map(i => ({ i }));

function getRequest() {

return new Promise(res => setTimeout(res([...values]), 0));

}

The code takes advantage of the spread operator in arrays to create a copy of an original array of values from 0 to 99. So, this mocked request will just retrieve an array of 100 integers.

Now, let's have a look to a super simplified version of the problematic function.

async function getAllItems(preloadedObjects) {

const listResponse = await getRequest();

const allObjects = [...preloadedObjects, ...listResponse];

return allObjects.length < 30000 ? await getAllItems(allObjects) : allObjects;

}

This function will call the getRequest function, then it will concatenate that list with the one passed by parameter and finally if a certain condition is not met, we will call again the function. This works nice and is easy to understand but, as you might suppose, it has several issues. What is wrong with it? Being a recursive function is not the problem per se but creating a copy of the existing data each time the recursive function is called and specially passing that data to the next call, well... that might be something.

Let's go to some numbers to make it easy to visualize. In the first run, we have 100 numbers, we copied that and pass that to the next function. As we copied that result, instead of just having 100, we have 200, but it shouldn't be a big problem initially. Instead of doing a copy with the spread operator we could just use add the items to a single list, but that would not have a big impact on the overall anyway. The second and more important issue is that when you call a new function, all the variables on the current stack will be kept until the call is completed. So, we have 200 items, then it's call to the next and now we copied 200 plus the 200 for the request and just by that we added 400 items in memory to the existing 200, 600 items in total when we only read 200. We could continue with this, but I imagine that you are seeing the problem.

The solution

There are several solutions to this problem. First, in scenarios like this, you should avoid copying data all the time (specially in cases like this when it's not necessary). An easy fix here would be to join the results at the end and instead of passing the result as parameter, send just the minimum that can help with the ending condition, in this case, the sum of all the elements.

async function getAllItems(currentLength) {

const listResponse = await getRequest();

const newLength = currentLength + listResponse.length;

return newLength < 30000

? [...(await getAllItems(newLength)), ...listResponse]

: listResponse;

}

Just by doing this, without removing the spread operator the result will be completely different. To perform a quick test on this, we can create a simple node program and execute it adding the --expose-gc parameter (i.e., node --expose-gc index.js) which enable us to have some memory consumption data. For the program you can use the following code which takes advantage of this parameter using process.memoryUsage().heapUsed to obtain the memory usage.

const formatMemoryUsage = data =>

`${Math.round((data / 1024 / 1024) * 100) / 100} MB`;

const memoryData = process.memoryUsage().heapUsed;

console.log(formatMemoryUsage(memoryData));

getAllItems([]).then(a => {

console.log(a.length);

const memoryData = process.memoryUsage().heapUsed;

console.log(formatMemoryUsage(memoryData));

});

As output, you should see something like the following, which shows the amount of memory at the beginning of the process, then the number of items and finally the amount of memory at the end before the gc is called.

3.59 MB

30000

60.9 MBWhich means that we have nearly 56mb just for 30k of integers. After running the new version that reduce the amount of copy operations, you will have something like the following.

3.59 MB

30000

47.72 MBThere is still a considerable amount of data being allocated. So let's iterate once again, and remove the spread operators by replacing [ ...(await getAllItems(newLength)), ...listResponse] with a simple Array concat operation: listResponse.concat(await getAllItems(newLength)). Note that this will create a new array and will not touch the other ones, so there still a few ways to improve it by adding the items in one of the arrays instead of just creating new ones. By using the concat function you will end up in something like the following.

3.59 MB

30000

23.5 MBThis is nearly 30% of the original memory consumption. So, just with these small changes we could list triple number of items on our original problem. But let's go further, let's remove recursion and use loops instead and let's remove the remaining copies of arrays and just add the items to a result list.

async function getAllItems() {

let iteration = 0;

const listResponse = [];

let result;

do {

result = await getRequest();

result.forEach(item => listResponse.push(item));

iteration++;

} while (iteration < 300);

return listResponse;

}

By doing these changes and executing the solution again we will end up with something like the following.

3.59 MB

30000

5.34 MBJust 2mb when we had nearly 56mb before. What's more, if you move this to 300k instead, you will end up with less than 20mb where the original version will probably crash saying FATAL ERROR: Ineffective mark-compacts near heap limit Allocation failed - JavaScript heap out of memory.

Conclusions

Even when the problem and the topic might seem too simple, I found that this is not a silly error and it's related to several things, specially not fully understanding the consequences of some cool operators like the spread operator in this case and the complexity of our code. It's always better to keep your code simple and try to think on those consequences.

By the way, this was a problem in a production environment detected way long after the code was original deployed as the original scenario for this code had a smaller amount if items to be listed.